Is the book better than the movie?

Can we finally be sure that The Hobbit is, by far, better as a book?

Introduction

Today, many intellectual properties are adapted into multiple media. For example, books are turned into movies, series, theatre plays or video games, or the other way around. Each medium has its own specificities, and its audience may have different expectations. We will focus on books and movies/TV. This common debate is a lively one: who has never heard, after watching a movie, “It was good, but I preferred the book”?

We would like to find out the differences and similarities between these media. Are books better rated than movies? Does the price or the time of the review affect the rating? Can we identify different consumer profiles? We intend to use the Amazon product dataset, whose reviews will help us measure interest in a product. We can also find people who reviewed both the movie and the book of the same franchise, as well as the sentiment of their reviews.

Summary

- Initial data

- Filtering

- Sentiment analysis and final data

- First overview

- Clustering reviews

- Categorising users

- Mentions of the book or the movie

- Users buying all the products

- More criteria

- Conclusion

Initial data

We used the Amazon dataset, specifically the reviews and metadata for the Books and Movies_and_TV categories. On the one hand, the reviews contain, for example, the score (referred to as “overall” in the following), the review text and the user ID; on the other hand, the metadata describes each product with its title, price or description.


Filtering

Now that we have gathered the data, we have to filter it. In order to get meaningful results,

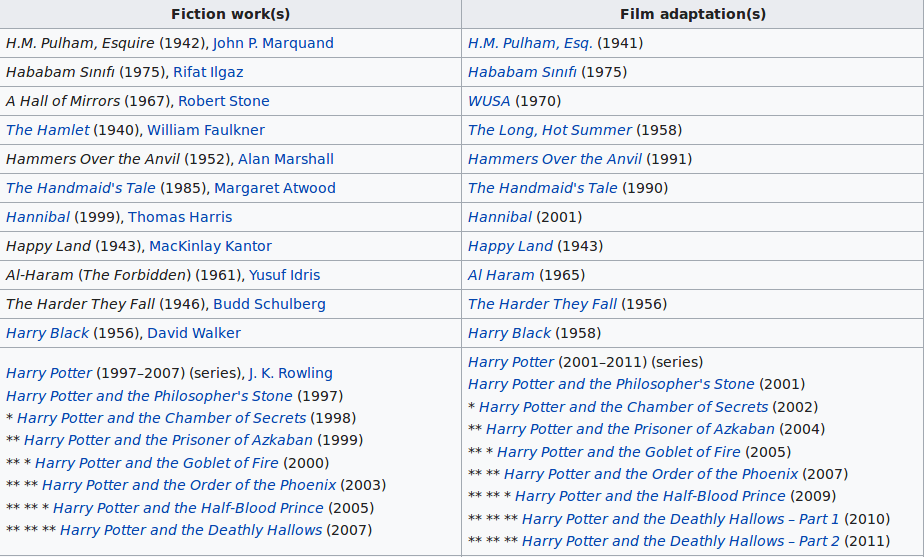

we have chosen to scrap wikipedia to obtain associations between books and movies.

The difficult part is then to associate a title collected from Wikipedia with an Amazon product ID.

Take an example: we want to match the movie The Three Musketeers with the product The Three Musketeers (Golden Films) [VHS].


As comparing the raw titles is not enough, we also normalise accents, punctuation, keywords such as dvd or vhs, and badly encoded characters.
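A minimal Python sketch of this kind of normalisation, for illustration only: `normalise_title`, `titles_match`, the `FORMAT_KEYWORDS` set and the exact-equality matching rule are assumptions, not the project's actual code. It strips accents, bracketed notes such as “(Golden Films)”, punctuation and format keywords; repairing badly encoded characters is left out.

```python
import re
import unicodedata

# Hypothetical list of format keywords to drop; the real list is not given in the text.
FORMAT_KEYWORDS = {"dvd", "vhs", "blu", "ray", "bluray"}


def normalise_title(title: str) -> str:
    """Lower-case a title and drop accents, bracketed notes, punctuation and format keywords."""
    # Decompose accented characters (é -> e + combining accent), then drop the accents.
    text = unicodedata.normalize("NFKD", title)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    text = text.lower()
    # Remove parenthesised or bracketed notes such as "(golden films)" or "[vhs]".
    text = re.sub(r"[\(\[].*?[\)\]]", " ", text)
    # Replace any remaining punctuation with spaces.
    text = re.sub(r"[^\w\s]", " ", text)
    words = [w for w in text.split() if w not in FORMAT_KEYWORDS]
    return " ".join(words)


def titles_match(wiki_title: str, amazon_title: str) -> bool:
    """Hypothetical matching rule: the normalised titles must be identical."""
    return normalise_title(wiki_title) == normalise_title(amazon_title)
```

With this rule, “The Three Musketeers” and “The Three Musketeers (Golden Films) [VHS]” both normalise to `the three musketeers` and therefore match.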

We then keep only the books and movies for which we managed to find at least one matching Amazon product on both sides.


Sentiment analysis and final data

Using the VADER package, we gave each text review a value between 0 and 1 (originally between -1 and 1) representing its overall sentiment:

- Between 0 and 0.25, the review is negative

- Between 0.25 and 0.75, the review is neutral

- Above 0.75, it is positive

This analysis takes into account negations, punctuation (!!!), word shape (such as capitalisation), emoticons, acronyms and so on, which are often used in our reviews.
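The rescaling and bucketing can be sketched as follows. The linear mapping (compound + 1) / 2 and the handling of the exact boundaries are assumptions; the compound score itself would come from VADER, for instance through the `vaderSentiment` package shown in the comment.

```python
# Assumes VADER's compound score in [-1, 1], e.g. obtained with the vaderSentiment package:
#   from vaderSentiment.vaderSentiment import SentimentIntensityAnalyzer
#   compound = SentimentIntensityAnalyzer().polarity_scores(text)["compound"]


def rescale_compound(compound: float) -> float:
    """Linearly map a compound score from [-1, 1] to [0, 1] (assumed rescaling)."""
    return (compound + 1) / 2


def sentiment_label(score: float) -> str:
    """Label a rescaled score with the 0.25 / 0.75 thresholds (boundary handling assumed)."""
    if score < 0.25:
        return "negative"
    if score <= 0.75:
        return "neutral"
    return "positive"
```

Under this mapping, the 0.25 and 0.75 thresholds correspond to compound scores of -0.5 and 0.5.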

Before closing the data-handling part, we also built a special subset containing only book reviews that can be matched with a review of the paired movie written by the same user (and vice versa). This subset is composed of 2000 users and 3000 paired reviews.
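One way to build this paired subset, sketched in plain Python. It assumes each review is a dict using the Amazon dataset's `reviewerID`, `asin` and `overall` fields plus our `compound` score, and a hypothetical `product_info` mapping from product ID to (franchise, kind) produced by the matching step. Every book/movie combination reviewed by the same user within a franchise counts as one pair, which is also an assumption.

```python
from collections import defaultdict


def paired_reviews(reviews, product_info):
    """Pair each user's book and movie reviews within the same franchise.

    reviews: iterable of dicts with 'reviewerID', 'asin', 'overall', 'compound'.
    product_info: hypothetical dict asin -> (franchise, kind), kind in {'book', 'movie'}.
    Returns a list of (user, franchise, book_review, movie_review).
    """
    by_key = defaultdict(lambda: {"book": [], "movie": []})
    for review in reviews:
        info = product_info.get(review["asin"])
        if info is None:
            continue  # product not matched to any franchise
        franchise, kind = info
        by_key[(review["reviewerID"], franchise)][kind].append(review)
    pairs = []
    for (user, franchise), sides in by_key.items():
        # Users with reviews on only one side produce no pair.
        for book_review in sides["book"]:
            for movie_review in sides["movie"]:
                pairs.append((user, franchise, book_review, movie_review))
    return pairs
```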

First overview

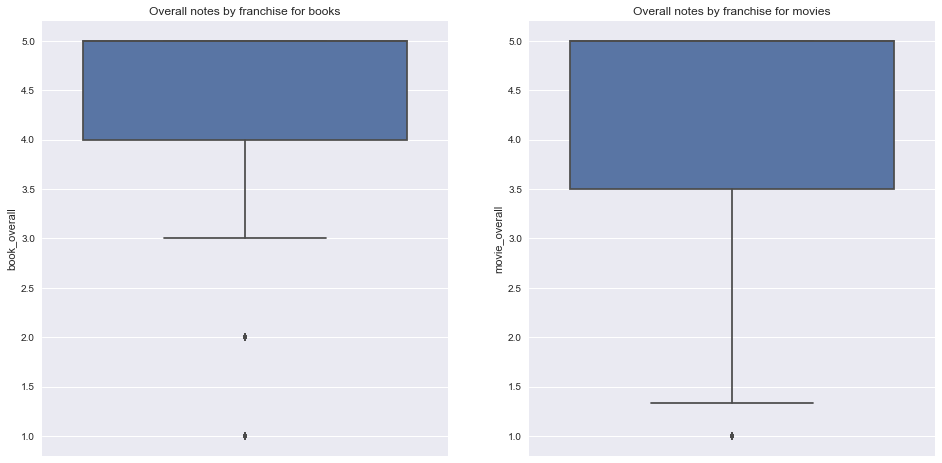

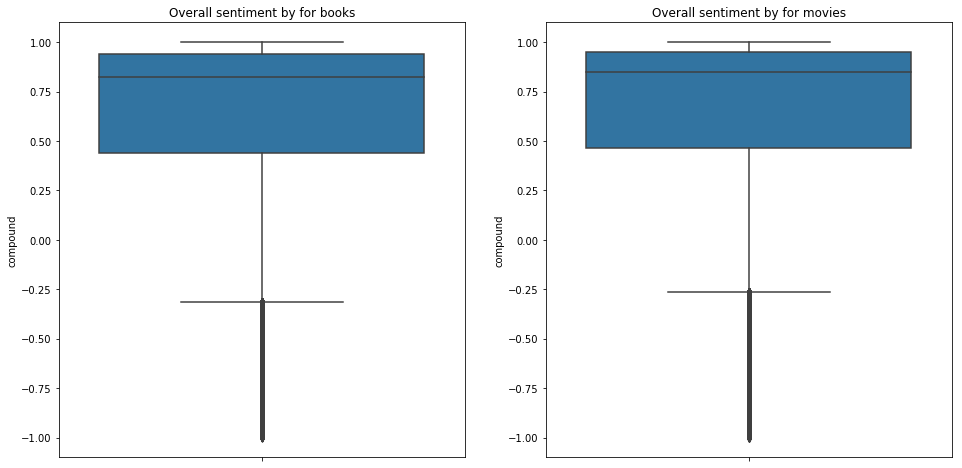

Now that the data is processed, it is time to begin the analysis and answer our initial question: is the book better than the movie?

When we group reviews by franchise, books seem to have higher overall scores and less variance than movies; moreover, the first quartile is clearly higher for books.

Looking at all reviews as a whole, however, the positiveness of sentiments is very similar, and even slightly higher for movies.
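The per-franchise comparison can be sketched like this: average each franchise's reviews separately for books and movies, then summarise the resulting distributions (mean, variance and quartiles, as read off a box plot). The `franchise`/`kind` fields and the helper names are assumptions; passing `field="compound"` would give the sentiment version of the comparison.

```python
from collections import defaultdict
from statistics import mean, pvariance, quantiles


def franchise_means(reviews, field="overall"):
    """Mean of `field` per franchise, separately for books and movies.

    reviews: iterable of dicts with 'franchise', 'kind' ('book' or 'movie') and `field`.
    Returns {'book': [...], 'movie': [...]}, one mean per franchise.
    """
    groups = defaultdict(list)
    for review in reviews:
        groups[(review["franchise"], review["kind"])].append(review[field])
    means = {"book": [], "movie": []}
    for (_, kind), values in sorted(groups.items()):
        means[kind].append(mean(values))
    return means


def summarise(values):
    """Mean, population variance and quartiles of a list (needs at least two values)."""
    q1, median, q3 = quantiles(values, n=4)
    return {"mean": mean(values), "variance": pvariance(values),
            "q1": q1, "median": median, "q3": q3}
```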

But it would be far too simple to stop at these results and conclude, so let’s analyse the biases and the major factors that affect our scores and reviews.

Clustering reviews

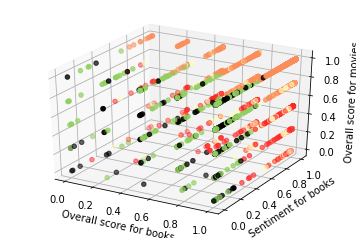

You might think that when you read a book and watch its movie, you always prefer one of them, so you give a good score and a positive review to one and a worse score and review to the other. But is it always that clear-cut?

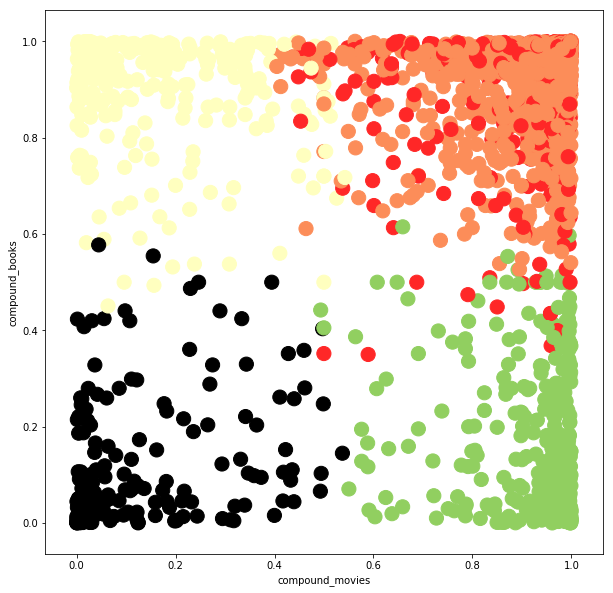

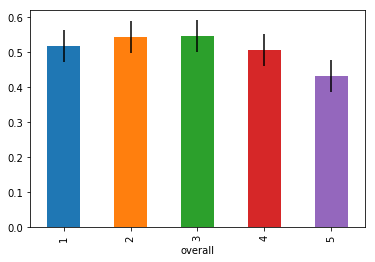

We used the K-means clustering method to try to answer this question. We fixed the number of clusters to 5 and used, as features, the overall score (overall) and the sentiment measure (referred to as compound) for both the movie and the book.
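To make the clustering step concrete, here is a minimal from-scratch version of Lloyd's K-means algorithm in plain Python; in practice one would more likely call scikit-learn's `KMeans(n_clusters=5).fit_predict(X)`. Each point is assumed to be one paired review, `(book overall, book compound, movie overall, movie compound)`. The text does not say whether the features were rescaled; since overall ranges from 1 to 5 and compound from 0 to 1, the score dimensions weigh more in the distances unless they are normalised.

```python
import math
import random


def kmeans(points, k, n_iter=100, seed=0):
    """Lloyd's algorithm: returns (labels, centroids) for a list of equal-length tuples."""
    rng = random.Random(seed)
    # Initialise the centroids with k distinct input points chosen at random.
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(n_iter):
        # Assignment step: attach every point to its nearest centroid (Euclidean distance).
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c])) for p in points
        ]
        if new_labels == labels:
            break  # assignments no longer change: converged
        labels = new_labels
        # Update step: move each centroid to the mean of the points assigned to it.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # an empty cluster keeps its previous centroid
                centroids[c] = [sum(dim) / len(members) for dim in zip(*members)]
    return labels, centroids
```

Calling `kmeans(rows, k=5)` on the paired-review rows returns one cluster label per row plus the five centroids, whose coordinates are what one reads to interpret each cluster. As with any K-means run, the result depends on the random initialisation.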

It is not easy to get a clear overview of the result, because we use 4 dimensions, but here is a summary:

Let’s expand a bit to better understand the clusters; here we only show the projection onto the two sentiment (compound) dimensions.

We can clearly distinguish at least 4 clusters.

Interpreting each cluster, we obtain roughly the following:

- Cluster 0 (in orange): good scores and reviews for both movies and books

- Cluster 1 (in yellow): bad reviews and scores for movies, good reviews and scores for books

- Cluster 2 (in green): generally good, but negative reviews for books

- Cluster 3 (in red): bad scores for movies

- Cluster 4 (in black): bad reviews for both movies and books

Categorising users

But everyone knows that guy who has always read the book and always criticises the movie!

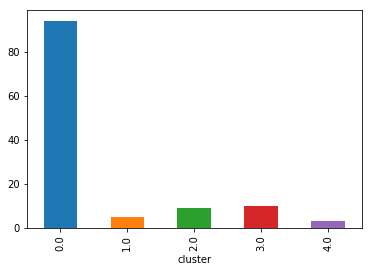

Do people who give many reviews always belong to the same cluster?

A large majority belongs to cluster 0: people who give good scores and reviews to both the movie and the book of one franchise tend to do the same for all franchises. However, we cannot really say that some users systematically give bad scores to movies (clusters 1 and 3).
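Measuring how consistent a user is can be sketched by taking, for each user with several paired reviews, the cluster that contains most of them and the share of their pairs it covers. The `(user_id, cluster)` input format and the `min_reviews` threshold are assumptions.

```python
from collections import Counter, defaultdict


def user_cluster_consistency(user_labels, min_reviews=2):
    """Majority cluster and its share for every user with at least `min_reviews` pairs.

    user_labels: iterable of (user_id, cluster) pairs, one per paired review.
    Returns {user_id: (majority_cluster, share_of_pairs_in_it)}.
    """
    per_user = defaultdict(list)
    for user, cluster in user_labels:
        per_user[user].append(cluster)
    result = {}
    for user, clusters in per_user.items():
        if len(clusters) < min_reviews:
            continue  # a single pair says nothing about consistency
        cluster, count = Counter(clusters).most_common(1)[0]
        result[user] = (cluster, count / len(clusters))
    return result
```

A user whose share is close to 1 always lands in the same cluster; counting majority clusters across users gives the distribution discussed above.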

Mentions of the book or the movie

One thing seems sure: if you have read the book and watched the movie, a bad score for one of them is surely influenced by the other.

Do the worst and best scores and reviews mention the book/movie?

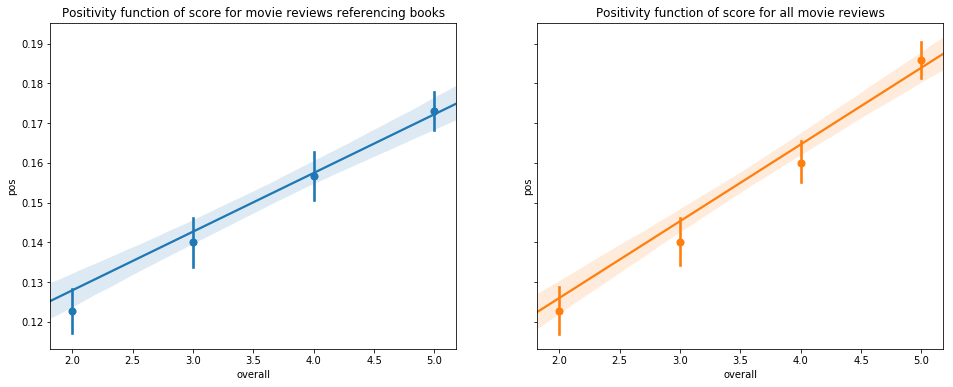

First, let’s compare the positiveness of reviews depending on whether they mention the book or not.

It appears that people write more positive movie reviews when they do not talk about the book, maybe because they enjoyed the film without comparing it to the book? (The effect is not really significant for book reviews.)

Looking at the scores, there is indeed something to notice: people seem to give worse scores when they mention the book or the movie.

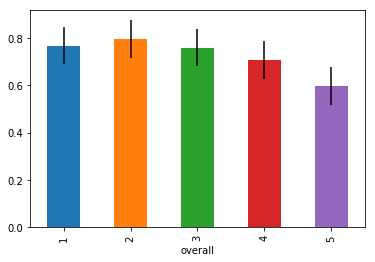

The first graph shows, for each score, the percentage of movie reviews that mention the book; e.g., about 80% of the movie reviews with a score of 2 talk about the book. The second one shows the percentage of book reviews that mention the associated movie.
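Both the comparison by mention and the percentages per score can be sketched with a simple keyword search. The regular expressions below (`book`/`novel` for movie reviews, `movie`/`film` for book reviews) and the helper names are hypothetical; the text does not say how mentions were detected.

```python
import re
from collections import defaultdict
from statistics import mean

# Hypothetical keyword patterns; the exact detection rule is not given in the text.
MENTIONS_BOOK = re.compile(r"\b(books?|novels?)\b", re.IGNORECASE)
MENTIONS_MOVIE = re.compile(r"\b(movies?|films?)\b", re.IGNORECASE)


def mean_by_mention(reviews, pattern):
    """Mean value (e.g. compound or overall) of reviews with and without a mention.

    reviews: iterable of (value, review_text).
    Returns (mean_with, mean_without), with None for an empty group.
    """
    with_mention, without_mention = [], []
    for value, text in reviews:
        (with_mention if pattern.search(text) else without_mention).append(value)
    return (
        mean(with_mention) if with_mention else None,
        mean(without_mention) if without_mention else None,
    )


def share_mentioning_by_score(reviews, pattern):
    """Percentage of reviews matching `pattern`, for each overall score.

    reviews: iterable of (overall, review_text).
    """
    totals, hits = defaultdict(int), defaultdict(int)
    for overall, text in reviews:
        totals[overall] += 1
        if pattern.search(text):
            hits[overall] += 1
    return {score: 100 * hits[score] / totals[score] for score in sorted(totals)}
```

Applied to movie reviews with `MENTIONS_BOOK`, `share_mentioning_by_score` gives the kind of per-score percentages plotted in the first graph; applied to book reviews with `MENTIONS_MOVIE`, the second one.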

Users buying all the products

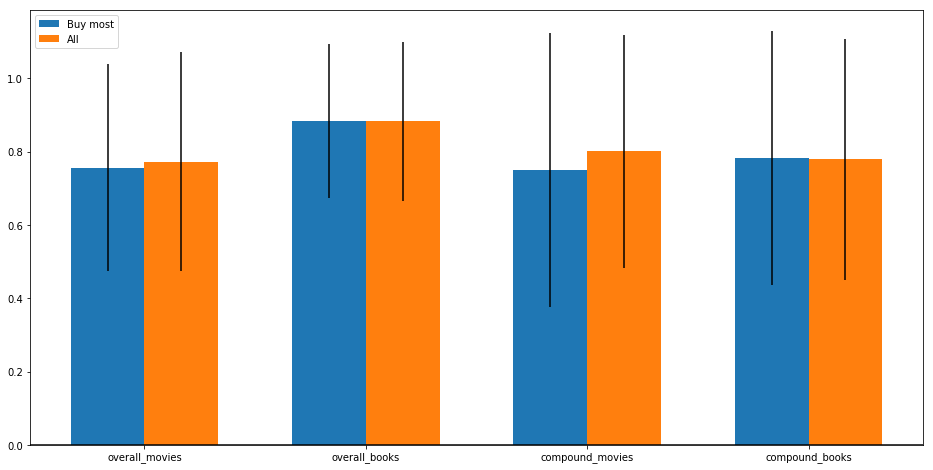

As with Star Wars, some people buy all the products linked to the same movie; they must give really good scores!

Well, this criterion does not have much impact:

Given the error bars, it is really difficult to draw a conclusion here. The results are very close; maybe large buyers (people who buy more than 50% of a franchise's products, when it has more than 2 products) give slightly worse scores.
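The “large buyer” rule stated above, and one possible error bar, can be sketched as follows. Owning a product is approximated here by having reviewed it, and the standard error of the mean is only one common choice of error bar; both are assumptions, as are the helper names.

```python
import math
from statistics import mean, stdev


def is_large_buyer(user_products, franchise_products):
    """More than 50% of a franchise's products, when the franchise has more than 2."""
    franchise_products = set(franchise_products)
    if len(franchise_products) <= 2:
        return False
    owned = set(user_products) & franchise_products
    return len(owned) / len(franchise_products) > 0.5


def mean_with_error(scores):
    """Mean and standard error of the mean (needs at least two scores)."""
    return mean(scores), stdev(scores) / math.sqrt(len(scores))
```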

More criteria

OK, you win. Now just tell me whether the book is better than the movie!

Before trying to answer our initial question, a few important criteria remain to be analysed.

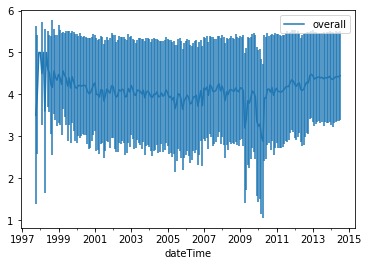

Do scores and reviews change over time?

Since our data covers a fairly long period (1996-2014), it is interesting to look at how scores and reviews evolve. However, we lack contextual information that would help us interpret the results with more confidence, for example the drop in 2010 (the graph shows the overall scores for movies).
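The yearly evolution can be obtained by grouping reviews by year, as in this sketch. It assumes each review carries a Unix timestamp, such as the `unixReviewTime` field of the Amazon review data; years are computed in UTC, and the `(timestamp, overall)` input format is hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timezone
from statistics import mean


def yearly_mean_overall(reviews):
    """Mean overall score per calendar year.

    reviews: iterable of (unix_review_time, overall).
    Returns {year: mean overall}, sorted by year.
    """
    by_year = defaultdict(list)
    for timestamp, overall in reviews:
        year = datetime.fromtimestamp(timestamp, tz=timezone.utc).year
        by_year[year].append(overall)
    return {year: mean(scores) for year, scores in sorted(by_year.items())}
```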

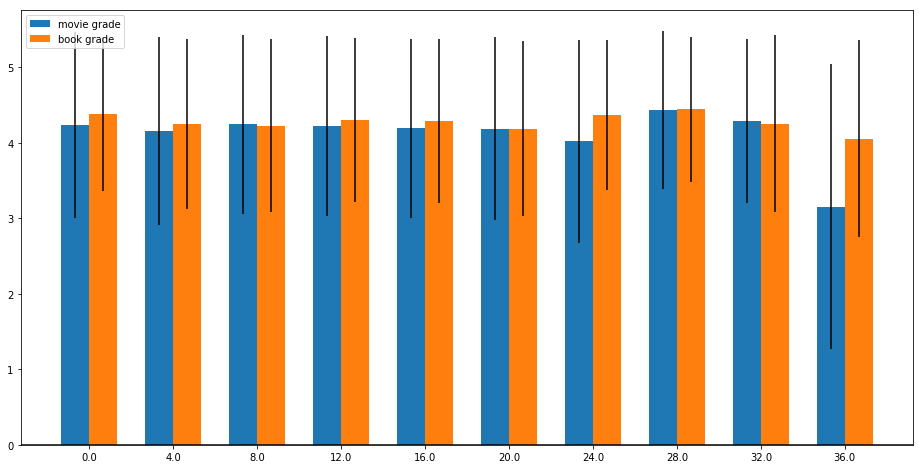

Order of the reviews

We studied the impact of the order of the reviews for the same franchise without noticing any clear difference. This suggests that people do not give better scores or reviews to a book depending on whether they have already watched the movie.
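The order comparison can be sketched by splitting paired reviews according to which product was reviewed first. Using review timestamps as a proxy for the order in which the book was read and the movie watched is an assumption, and the input tuple format is hypothetical.

```python
from statistics import mean


def book_score_by_order(pairs):
    """Mean book score split by which product the user reviewed first.

    pairs: iterable of (book_time, book_overall, movie_time, movie_overall),
    one per paired review. Pairs reviewed at the same time are skipped.
    """
    book_first, movie_first = [], []
    for book_time, book_overall, movie_time, _movie_overall in pairs:
        if book_time < movie_time:
            book_first.append(book_overall)
        elif movie_time < book_time:
            movie_first.append(book_overall)
    return {
        "book_first": mean(book_first) if book_first else None,
        "movie_first": mean(movie_first) if movie_first else None,
    }
```

The same split applied to the movie score, or to the compound values, covers the other comparisons.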

Does the price have an impact?

The overall scores are negatively correlated with the price, and so is the positiveness of reviews. However, this behavior is similar for both movies and books.
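The correlation can be computed with the Pearson coefficient, sketched below from its definition (Python 3.10+ also offers `statistics.correlation`). Which coefficient the analysis actually used is not stated; since overall scores only take five values, Spearman's rank correlation would be a reasonable alternative. Computing it separately for books and movies gives the comparison described above.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences.

    Raises ValueError for mismatched or too-short inputs; a constant sequence
    (zero variance) leads to a ZeroDivisionError.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    std_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    std_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (std_x * std_y)
```

For example, `pearson(book_prices, book_scores)` and `pearson(movie_prices, movie_scores)` (hypothetical lists) would each be expected to come out negative given the observation above.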

Quality of the product

One big bias we have to mention is the one introduced by the quality of the product. For a book, for example, even with the same text, many other criteria are taken into account in the final score (quality of the paper, format, ...).

Conclusion

Taking into account all the previous points and biases, it would not be reasonable to give a definitive answer to our question. But it would be a shame if you made the effort to read this whole article and did not get a small reward. So, to conclude, here are the top 5 (according to Amazon reviews, keeping only franchises with more than 8 reviewers) of:

- Books better than movies:

  - Flowers in the Attic

  - Queen of the Damned

  - Timeline

  - The Scarlet Letter

  - The Amityville Horror

  - The Hobbit (we added this sixth one so that the title question gets an answer)

- Movies better than books:

- Books that will make you give kinder reviews than the movies:

  - The Picture of Dorian Gray

  - The Hellbound Heart

  - The Time Machine

  - Heidi

  - (No fifth entry: it would be Queen of the Damned again)

- Books that will make you give more negative reviews than the movies:

Note that the provided links are not fully representative of all Amazon products.
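The ranking can be sketched as follows: for every franchise with enough reviewers, compute the mean book score minus the mean movie score, then take the largest and smallest gaps. Requiring more than 8 distinct reviewers on each side is our reading of the threshold above, and the input format and helper name are hypothetical; ranking on compound instead of overall would give the “kinder reviews” lists.

```python
from collections import defaultdict
from statistics import mean


def rank_franchises(reviews, min_reviewers=9, top=5):
    """Franchises ranked by mean book score minus mean movie score.

    reviews: iterable of (franchise, kind, reviewer_id, value), kind in {'book', 'movie'}.
    Only franchises with at least `min_reviewers` distinct reviewers on each side are kept.
    Returns (books_better, movies_better), each a list of at most `top` franchises.
    """
    scores = defaultdict(lambda: {"book": [], "movie": []})
    reviewers = defaultdict(lambda: {"book": set(), "movie": set()})
    for franchise, kind, user, value in reviews:
        scores[franchise][kind].append(value)
        reviewers[franchise][kind].add(user)
    gaps = {}
    for franchise, sides in scores.items():
        enough = all(len(reviewers[franchise][k]) >= min_reviewers for k in ("book", "movie"))
        if enough and sides["book"] and sides["movie"]:
            gaps[franchise] = mean(sides["book"]) - mean(sides["movie"])
    ranked = sorted(gaps, key=gaps.get, reverse=True)
    return ranked[:top], ranked[::-1][:top]
```

With only a handful of eligible franchises, the same franchise can appear in both lists.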